TOG (32), TVCG (45), SIGGRAPH/SA - Conference Track (6) | CHI/UIST/IMWUT/VR (17) | PAMI (4), CVPR/ICCV/ECCV/AAAI (18; Oral/Highlight: 6)

For the most up-to-date list of publications, please check out my Google Scholar page.

Feng-Lin Liu, Shi-Yang Li, Yan-Pei Cao, Hongbo Fu, and Lin Gao. Sketch3DVE: Sketch-based 3D-Aware Scene Video Editing. ACM SIGGRAPH 2025 Conference Papers. Article No. 152. December 2025.

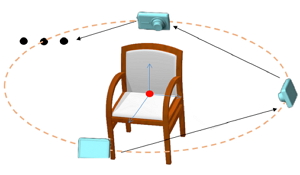

Abstract: Recent video editing methods achieve attractive results in style transfer or appearance modification. However, editing the structural content of 3D scenes in videos remains challenging, particularly when dealing with significant viewpoint changes, such as large camera rotations or zooms. Key challenges include generating novel view content that remains consistent with the original video, preserving unedited regions, and translating sparse 2D inputs into realistic 3D video outputs. To address these issues, we propose Sketch3DVE, a sketch-based 3D-aware video editing method to enable detailed local manipulation of videos with significant viewpoint changes. To solve the challenge posed by sparse inputs, we employ image editing methods to generate edited results for the first frame, which are then propagated to the remaining frames of the video. We utilize sketching as an interaction tool for precise geometry control, while other mask-based image editing methods are also supported. To handle viewpoint changes, we perform a detailed analysis and manipulation of the 3D information in the video. Specifically, we utilize a dense stereo method to estimate a point cloud and the camera parameters of the input video. We then propose a point cloud editing approach that uses depth maps to represent the 3D geometry of newly edited components, aligning them effectively with the original 3D scene. To seamlessly merge the newly edited content with the original video while preserving the features of unedited regions, we introduce a 3D-aware mask propagation strategy and employ a video diffusion model to produce realistic edited videos. Extensive experiments demonstrate the superiority of Sketch3DVE in video editing.


Feng-Lin Liu, Hongbo Fu, Xintao Wang, Weicai Ye, Pengfei Wan, Di Zhang, and Lin Gao. SketchVideo: Sketch-based Video Generation and Editing. CVPR 2025. Nashville TN, USA. June 2025.

Abstract: Video generation and editing conditioned on text prompts or images have undergone significant advancements. However, challenges remain in accurately controlling global layout and geometry details solely by texts, and supporting motion control and local modification through images. In this paper, we aim to achieve sketch-based spatial and motion control for video generation and support fine-grained editing of real or synthetic videos. Based on the DiT video generation model, we propose a memory-efficient control structure with sketch control blocks that predict residual features of skipped DiT blocks. Sketches are drawn on one or two keyframes (at arbitrary time points) for easy interaction. To propagate such temporally sparse sketch conditions across all frames, we propose an inter-frame attention mechanism to analyze the relationship between the keyframes and each video frame. For sketch-based video editing, we design an additional video insertion module that maintains consistency between the newly edited content and the original video's spatial feature and dynamic motion. During inference, we use latent fusion for the accurate preservation of unedited regions. Extensive experiments demonstrate that our SketchVideo achieves superior performance in controllable video generation and editing. |
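The latent fusion step mentioned at the end of this abstract can be pictured as a mask-weighted blend of two latents. The sketch below is only an illustration under that assumption: the function name `fuse_latents`, the 2D list layout, and the toy values are hypothetical and are not taken from the SketchVideo paper or its code.

```python
def fuse_latents(original, edited, mask):
    """Blend two 2D latent grids with a mask in [0, 1].

    mask == 1 takes the edited value, mask == 0 keeps the original value.
    Hypothetical helper for illustration only.
    """
    return [
        [m * e + (1.0 - m) * o for o, e, m in zip(row_o, row_e, row_m)]
        for row_o, row_e, row_m in zip(original, edited, mask)
    ]


# Toy 2x3 latents: the original is all zeros, the edited result is all ones.
original = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
edited = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
# Only the middle column is marked as edited.
mask = [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]

fused = fuse_latents(original, edited, mask)
```

Positions outside the mask come back unchanged, which is the property the abstract relies on for preserving unedited regions.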

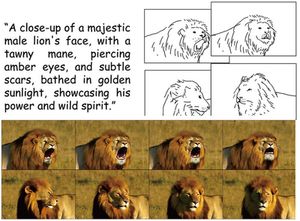

Dong Liang, Jinyuan Jia, Yuhao Liu, Zhanghan Ke, Hongbo Fu, and Rynson Lau. VODiff: Controlling Object Visibility Order in Text-to-Image Generation. CVPR 2025. Nashville TN, USA. June 2025.

Abstract: Recent advancements in diffusion models have significantly enhanced the performance of text-to-image models in image synthesis. To enable control over the spatial locations of the generated objects, diffusion-based methods typically utilize object layout as an auxiliary input. However, we observe that this approach treats all objects as being on the same layer and neglects their visibility order, leading to the synthesis of overlapping objects with incorrect occlusions. To address this limitation, we introduce in this paper a new training-free framework that considers object visibility order explicitly and allows users to place overlapping objects in a stack of layers. Our framework consists of two visibility-based designs. First, we propose a novel Sequential Denoising Process (SDP) to divide the whole image generation into multiple stages for different objects, with each stage primarily focusing on one object. Second, we propose a novel Visibility-Order-Aware (VOA) Loss to transform the layout and occlusion constraints into an attention map optimization process to improve the accuracy of synthesizing object occlusions in complex scenes. By merging these two novel components, our framework, dubbed VODiff, enables the generation of photorealistic images that satisfy user-specified spatial constraints and object occlusion relationships. In addition, we introduce VOBench, a diverse benchmark dataset containing 200 curated samples, each with a reference image, text prompts, object visibility orders and layout maps. We conduct extensive evaluations on this dataset to demonstrate the superiority of our approach.

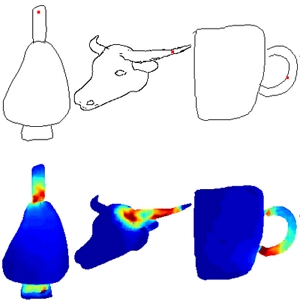

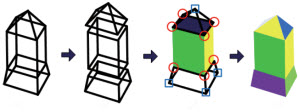

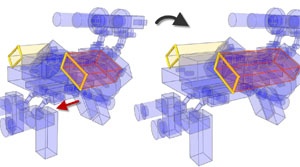

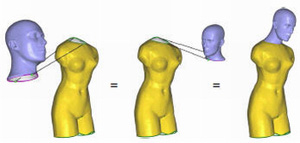

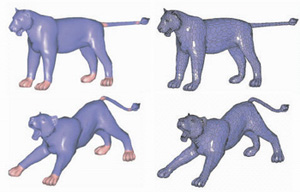

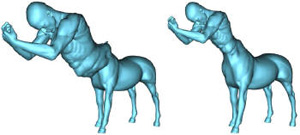

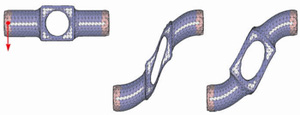

Lin Gao, Jie Yang, Bo-Tao Zhang, Jia-Mu Sun, Yu-Jie Yuan, Hongbo Fu, and Yu-Kun Lai. Real-time Large-scale Deformation for Gaussian Splatting. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH Asia 2024. 43(6). Article No. 200. December 2024.

Abstract: Neural implicit representations, including Neural Distance Fields and Neural Radiance Fields, have demonstrated significant capabilities for reconstructing surfaces with complicated geometry and topology, and generating novel views of a scene. Nevertheless, it is challenging for users to directly deform or manipulate these implicit representations with large deformations in a real-time fashion. Gaussian Splatting (GS) has recently become a promising method with explicit geometry for representing static scenes and facilitating high-quality and real-time synthesis of novel views. However, it cannot be easily deformed due to the use of discrete Gaussians and the lack of explicit topology. To address this, we develop a novel GS-based method that enables interactive deformation. Our key idea is to design an innovative mesh-based GS representation, which is integrated into Gaussian learning and manipulation. 3D Gaussians are defined over an explicit mesh, and they are bound with each other: the rendering of 3D Gaussians guides the mesh face split for adaptive refinement, and the mesh face split directs the splitting of 3D Gaussians. Moreover, the explicit mesh constraints help regularize the Gaussian distribution, suppressing poor-quality Gaussians (e.g., misaligned Gaussians, long-narrow shaped Gaussians), thus enhancing visual quality and reducing artifacts during deformation. Based on this representation, we further introduce a large-scale Gaussian deformation technique to enable deformable GS, which alters the parameters of 3D Gaussians according to the manipulation of the associated mesh. Our method benefits from existing mesh deformation datasets for more realistic data-driven Gaussian deformation. Extensive experiments show that our approach achieves high-quality reconstruction and effective deformation, while maintaining the promising rendering results at a high frame rate (65 FPS on average on a single commodity GPU).

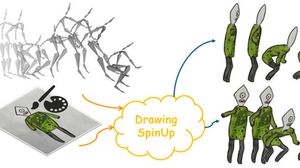

Jie Zhou*, Chufeng Xiao*, Miu-Ling Lam, and Hongbo Fu. DrawingSpinUp: 3D Animation from Single Character Drawings. ACM SIGGRAPH Asia 2024 Conference Papers. Article No. 98. December 2024.

Abstract: Animating various character drawings is an engaging visual content creation task. Given a single character drawing, existing animation methods are limited to flat 2D motions and thus lack 3D effects. An alternative solution is to reconstruct a 3D model from a character drawing as a proxy and then retarget 3D motion data onto it. However, the existing image-to-3D methods do not work well for amateur character drawings in terms of appearance and geometry. We observe that the contour lines, commonly existing in character drawings, introduce significant ambiguity in texture synthesis due to their view-dependence. Additionally, thin regions represented by single-line contours are difficult to reconstruct (e.g., slim limbs of a stick figure) due to their delicate structures. To address these issues, we propose a novel system, DrawingSpinUp, to produce plausible 3D animations and breathe life into character drawings, allowing them to freely spin up, leap, and even perform a hip-hop dance. For appearance improvement, we adopt a removal-then-restoration strategy to first remove the view-dependent contour lines and then render them back after retargeting the reconstructed character. For geometry refinement, we develop a skeleton-based thinning deformation algorithm to refine the slim structures represented by the single-line contours. The experimental evaluations show that our method outperforms the existing 2D and 3D animation methods and generates high-quality 3D animations from single character drawings.

Zhiyi Kuang, Yanchao Yang, Siyan Dong, Jiayue Ma, Hongbo Fu, and Youyi Zheng. OLAT Gaussians for Generic Relightable Appearance Acquisition. ACM SIGGRAPH Asia 2024 Conference Papers. Article No. 13. December 2024.

Abstract: One-light-at-a-time (OLAT) images sample a broader range of object appearance changes than images captured under constant lighting and are superior as input to object relighting. Although existing methods have produced reasonable relighting quality using OLAT images, they utilize surface-like representations, limiting their capacity to model volumetric objects, such as furs. Besides, their rendering process is time-consuming and still far from being used in real-time applications. To address these issues, we propose OLAT Gaussians to build relightable representations of objects from multi-view OLAT images. We build our pipeline on 3D Gaussian Splatting (3DGS), which achieves real-time high-quality rendering. To augment 3DGS with relighting capability, we assign each Gaussian a learnable feature vector, serving as an index to query the objects' appearance field. Specifically, we decompose the appearance field into an incident illumination function and a scattering function. The former accounts for light transmittance and fore-shortening effects, while the latter represents the object's material properties to scatter light. Rather than using an off-the-shelf physically-based parametric rendering formulation, we model both functions using multi-layer perceptrons (MLPs). This makes our method suitable for various objects, e.g., opaque surfaces, semi-transparent volumes, furs, fabrics, etc. Given a camera view and a point light position, we compute each Gaussian's color as the product of the light intensity, the incident illumination value, and the scattering value, and then render the target image through the 3DGS rasterizer. To enhance rendering quality, we further utilize a proxy mesh to provide OLAT Gaussians with normals to improve highlights and visibility cues to improve shadows. Extensive experiments demonstrate that our method produces state-of-the-art rendering quality with significantly more details in texture-rich areas than previous methods. 
Our method also achieves real-time rendering, allowing users to interactively modify camera views and point light positions to get immediate rendering results, which are not available from the offline rendering of previous methods.
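The per-Gaussian shading rule stated in this abstract can be written compactly. The symbols are notation chosen here for illustration and do not come from the paper: $c_i$ is the color of Gaussian $i$, $I$ is the light intensity, $L_i$ is the incident illumination value, and $S_i$ is the scattering value, with $L_i$ and $S_i$ being the outputs of the two MLPs queried with that Gaussian's feature vector.

```latex
c_i = I \cdot L_i \cdot S_i
```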


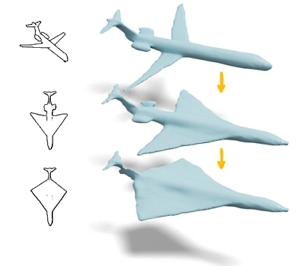

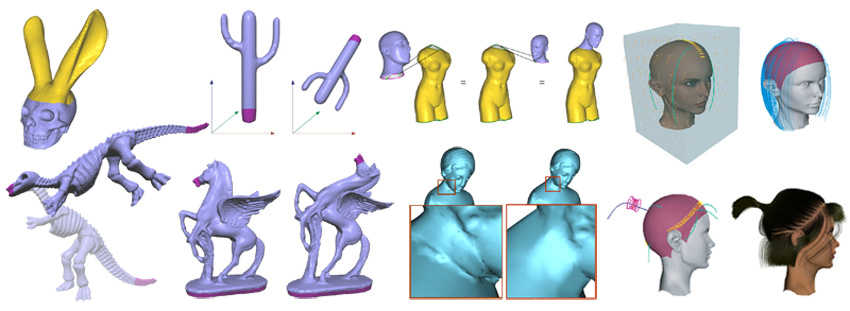

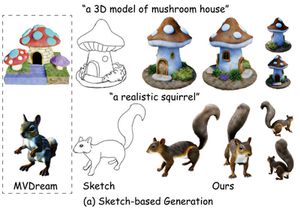

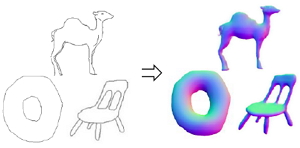

Feng-Lin Liu, Hongbo Fu, Yu-Kun Lai, and Lin Gao. SketchDream: Sketch-based Text-to-3D Generation and Editing. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH 2024. 43(4). Article No. 44. July-August 2024.

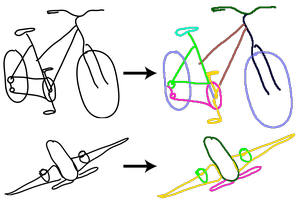

Abstract: Existing text-based 3D generation methods generate attractive results but lack detailed geometry control. Sketches, known for their conciseness and expressiveness, have contributed to intuitive 3D modeling but are confined to producing texture-less mesh models within predefined categories. Integrating sketch and text simultaneously for 3D generation promises enhanced control over geometry and appearance but faces challenges from 2D-to-3D translation ambiguity and multi-modal condition integration. Moreover, further editing of 3D models in arbitrary views will give users more freedom to customize their models. However, it is difficult to achieve high generation quality, preserve unedited regions, and manage proper interactions between shape components. To solve the above issues, we propose a text-driven 3D content generation and editing method, SketchDream, which supports NeRF generation from given hand-drawn sketches and achieves free-view sketch-based local editing. To tackle the 2D-to-3D ambiguity challenge, we introduce a sketch-based multi-view image generation diffusion model, which leverages depth guidance to establish spatial correspondence. A 3D ControlNet with a 3D attention module is utilized to control multi-view images and ensure their 3D consistency. To support local editing, we further propose a coarse-to-fine editing approach: the coarse phase analyzes component interactions and provides 3D masks to label edited regions, while the fine stage generates realistic results with refined details by local enhancement. Extensive experiments validate that our method generates higher-quality results compared with a combination of 2D ControlNet and image-to-3D generation techniques and achieves detailed control compared with existing diffusion-based 3D editing approaches. |

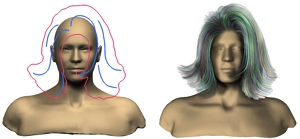

Keyu Wu, Lingchen Yang, Zhiyi Kuang, Yao Feng, Xutao Han, Yuefan Shen, Hongbo Fu, Kun Zhou, and Youyi Zheng. MonoHair: High-Fidelity Hair Modeling from a Monocular Video. CVPR 2024. June 2024. Oral Presentation

Abstract: Undoubtedly, high-fidelity 3D hair is crucial for achieving realism, artistic expression, and immersion in computer graphics. While existing 3D hair modeling methods have achieved impressive performance, the challenge of achieving high-quality hair reconstruction persists: they either require strict capture conditions, making practical applications difficult, or heavily rely on learned prior data, obscuring fine-grained details in images. To address these challenges, we propose a generic framework to achieve high-fidelity hair reconstruction from a monocular video, without specific requirements for environments. Our approach bifurcates the hair modeling process into two main stages: precise exterior reconstruction and interior structure inference. The exterior is meticulously crafted using our Patch-based Multi-View Optimization (PMVO). This method strategically collects and integrates hair information from multiple views, independent of prior data, to produce a high-fidelity exterior 3D line map. This map not only captures intricate details but also facilitates the inference of the hair’s inner structure. For the interior, we employ a data-driven, multi-view 3D hair reconstruction method. This method utilizes 2D structural renderings derived from the reconstructed exterior, mirroring the synthetic 2D inputs used during training. This alignment effectively bridges the domain gap between our training data and real-world data, thereby enhancing the accuracy and reliability of our interior structure inference. Lastly, we generate a strand model and resolve the directional ambiguity by our hair growth algorithm. Our experiments demonstrate that our method exhibits robustness across diverse hairstyles and achieves state-of-the-art performance. |

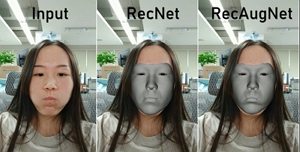

Ziqi Cai, Kaiwen Jiang, Shu-Yu Chen, Yu-Kun Lai, Hongbo Fu, and Lin Gao. Real-time 3D-aware Portrait Video Relighting. CVPR 2024. June 2024. Poster Highlight

Abstract: Synthesizing realistic videos of talking faces under custom lighting conditions and viewing angles benefits various downstream applications like video conferencing. However, most existing relighting methods are either time-consuming or unable to adjust the viewpoints. In this paper, we present the first real-time 3D-aware method for relighting in-the-wild videos of talking faces based on Neural Radiance Fields (NeRF). Given an input portrait video, our method can synthesize talking faces under both novel views and novel lighting conditions with a photo-realistic and disentangled 3D representation. Specifically, we infer an albedo tri-plane, as well as a shading tri-plane based on a desired lighting condition for each video frame with fast dual-encoders. We also leverage a temporal consistency network to ensure smooth transitions and reduce flickering artifacts. Our method runs at 32.98 fps on consumer-level hardware and achieves state-of-the-art results in terms of reconstruction quality, lighting error, lighting instability, temporal consistency and inference speed. We demonstrate the effectiveness and interactivity of our method on various portrait videos with diverse lighting and viewing conditions. |

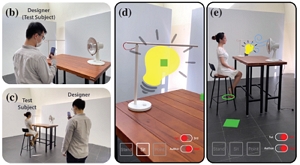

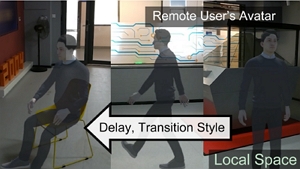

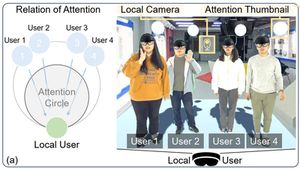

Hui Ye*, Jiaye Leng*, Pengfei Xu, Karan Singh, and Hongbo Fu. ProInterAR: A Visual Programming Platform for Creating Immersive AR Interactions. CHI 2024. Article No. 610. May 2024.

Abstract: AR applications commonly contain diverse interactions among different AR contents. Creating such applications requires creators to have advanced programming skills for scripting interactive behaviors of AR contents, repeated transferring and adjustment of virtual contents from virtual to physical scenes, testing by traversing between desktop interfaces and target AR scenes, and digitalizing AR contents. Existing immersive tools for prototyping/authoring such interactions are tailored for domain-specific applications. To support programming general interactive behaviors of real object(s)/environment(s) and virtual object(s)/environment(s) for novice AR creators, we propose ProInterAR, an integrated visual programming platform to create immersive AR applications with a tablet and an AR-HMD. Users can construct interaction scenes by creating virtual contents and augmenting real contents from the view of an AR-HMD, script interactive behaviors by stacking blocks from a tablet UI, and then execute and control the interactions in the AR scene. We showcase a wide range of AR application scenarios enabled by ProInterAR, including AR game, AR teaching, sequential animation, AR information visualization, etc. Two usability studies validate that novice AR creators can easily program various desired AR applications using ProInterAR. |

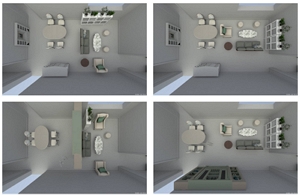

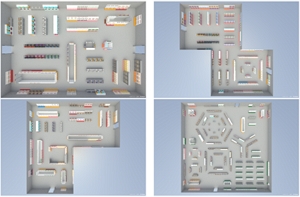

Shao-Kui Zhang, Jia-Hong Liu, Yike Li, Tianyi Xiong, Ke-Xin Ren, Hongbo Fu, and Song-Hai Zhang. Automatic Generation of Commercial Scenes. MM 2023. 1137-1147. Oct. 2023.

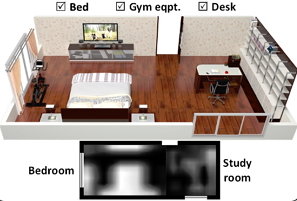

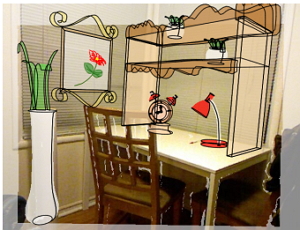

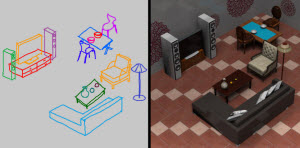

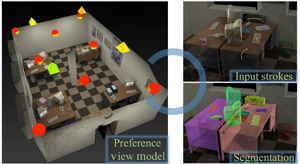

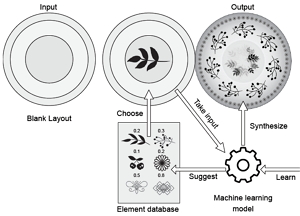

Abstract: Commercial scenes such as markets and shops are everyday scenes for both virtual scenes and real-world interior designs. However, existing literature on interior scene synthesis mainly focuses on formulating and optimizing residential scenes such as bedrooms, living rooms, etc. Existing literature typically presents a set of relations among objects. It recognizes each furniture object as the smallest unit while optimizing a residential room. However, object relations become less critical in commercial scenes since shelves are often placed next to each other, so pre-calculated relations of objects are less needed. Instead, interior designers resort to evaluating how groups of objects perform in commercial scenes, i.e., the smallest unit to be evaluated is a group of objects. This paper presents a system that automatically synthesizes market-like commercial scenes in virtual environments. Following the rules of commercial layout design, we parameterize groups of objects as "patterns" contributing to a scene. Each pattern directly yields a human-centric routine locally, provides potential connectivity with other routines, and derives the arrangements of objects concerning itself according to the assigned parameters. In order to optimize a scene, the patterns are iteratively multiplexed to insert new routines or modify existing ones under a set of constraints derived from commercial layout designs. Through extensive experiments, we demonstrate the ability of our framework to generate plausible and practical commercial scenes.

Yiqian Wu, Jing Zhang, Hongbo Fu, and Xiaogang Jin. LPFF: A Portrait Dataset for Face Generators Across Large Poses. ICCV 2023. 20327-20337. Oct. 2023.

Abstract: The creation of 2D realistic facial images and 3D face shapes using generative networks has been a hot topic in recent years. Existing face generators exhibit exceptional performance on faces in small to medium poses (with respect to frontal faces) but struggle to produce realistic results for large poses. The distorted rendering results on large poses in 3D-aware generators further show that the generated 3D face shapes are far from the distribution of 3D faces in reality. We find that the above issues are caused by the training dataset's pose imbalance. In this paper, we present LPFF, a large-pose Flickr face dataset comprised of 19,590 high-quality real large-pose portrait images. We utilize our dataset to train a 2D face generator that can process large-pose face images, as well as a 3D-aware generator that can generate realistic human face geometry. To better validate our pose-conditional 3D-aware generators, we develop a new FID measure to evaluate the 3D-level performance. Through this novel FID measure and other experiments, we show that LPFF can help 2D face generators extend their latent space and better manipulate the large-pose data, and help 3D-aware face generators achieve better view consistency and more realistic 3D reconstruction results. |

Kaiwen Jiang, Shu-Yu Chen, Hongbo Fu, and Lin Gao. NeRFFaceLighting: Implicit and Disentangled Face Lighting Representation Leveraging Generative Prior in Neural Radiance Fields. ACM Transactions on Graphics. 42(3). Article No. 35. June 2023.

Abstract: 3D-aware portrait lighting control is an emerging and promising domain, thanks to the recent advance of generative adversarial networks and neural radiance fields. Existing solutions typically try to decouple the lighting from the geometry and appearance for disentangled control with an explicit lighting representation (e.g., Lambertian or Phong). However, they either are limited to a constrained lighting condition (e.g., directional light) or demand a tricky-to-fetch dataset as supervision for the intrinsic compositions (e.g., the albedo). We propose NeRFFaceLighting to explore an implicit representation for portrait lighting based on the pretrained tri-plane representation to address the above limitations. We approach this disentangled lighting-control problem by distilling the shading from the original fused representation of both appearance and lighting (i.e., one tri-plane) to their disentangled representations (i.e., two tri-planes) with the conditional discriminator to supervise the lighting effects. We further carefully design the regularization to reduce the ambiguity of such decomposition and enhance the ability of generalization to unseen lighting conditions. Moreover, our method can be extended to enable 3D-aware real portrait relighting. Through extensive quantitative and qualitative evaluations, we demonstrate the superior 3D-aware lighting control ability of our model compared to alternative and existing solutions. |

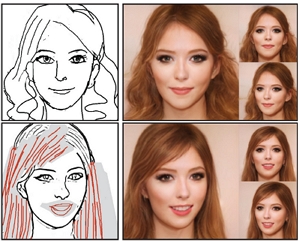

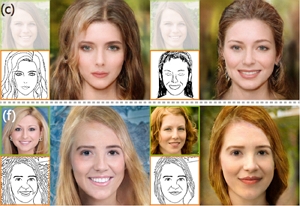

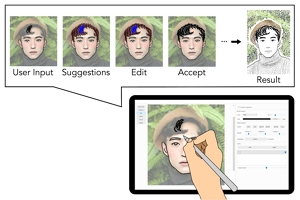

Lin Gao, Feng-Lin Liu, Shu-Yu Chen, Kaiwen Jiang, Chun-Peng Li, Yu-Kun Lai, and Hongbo Fu. SketchFaceNeRF: Sketch-based Facial Generation and Editing in Neural Radiance Fields. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH 2023. 42(4). Article No. 159. July 2023.

Abstract: Realistic 3D facial generation based on Neural Radiance Fields (NeRFs) from 2D sketches benefits various applications. Despite the high realism of free-view rendering results of NeRFs, it is tedious and difficult for artists to achieve detailed 3D control and manipulation. Meanwhile, due to its conciseness and expressiveness, sketching has been widely used for 2D facial image generation and editing. Applying sketching to NeRFs is challenging due to the inherent uncertainty for 3D generation with 2D constraints, a significant gap in content richness when generating faces from sparse sketches, and potential inconsistencies for sequential multi-view editing given only 2D sketch inputs. To address these challenges, we present SketchFaceNeRF, a novel sketch-based 3D facial NeRF generation and editing method, to produce free-view photo-realistic images. To solve the challenge of sketch sparsity, we introduce a Sketch Tri-plane Prediction net to first inject the appearance into sketches, thus generating features given reference images to allow color and texture control. Such features are then lifted into compact 3D tri-planes to supplement the absent 3D information, which is important for improving robustness and faithfulness. However, during editing, consistency for unseen or unedited 3D regions is difficult to maintain due to limited spatial hints in sketches. We thus adopt a Mask Fusion module to transform free-view 2D masks (inferred from sketch editing operations) into the tri-plane space as 3D masks, which guide the fusion of the original and sketch-based generated faces to synthesize edited faces. We further design an optimization approach with a novel space loss to improve identity retention and editing faithfulness. Our pipeline enables users to flexibly manipulate faces from different viewpoints in 3D space, easily designing desirable facial models. 
Extensive experiments validate that our approach is superior to the state-of-the-art 2D sketch-based image generation and editing approaches in realism and faithfulness.

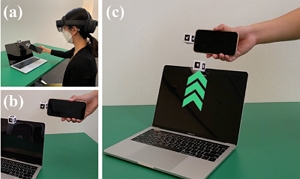

Hui Ye*, Jiaye Leng*, Chufeng Xiao*, Lili Wang, and Hongbo Fu. ProObjAR: Prototyping Spatially-aware Interactions of Smart Objects with AR-HMD. CHI 2023. Article No. 457. April 23 - 28, 2023.

Abstract: The rapid advances in technologies have brought new interaction paradigms of smart objects (e.g., digital devices) beyond digital device screens. By utilizing spatial properties, configurations, and movements of smart objects, designing spatial interaction, which is one of the emerging interaction paradigms, efficiently promotes engagement with digital content and physical facilities. However, as an important phase of design, prototyping such interactions remains challenging, since there is no ad-hoc approach for this emerging paradigm. Designers usually rely on methods that require fixed hardware setup and advanced coding skills to script and validate early-stage concepts. These requirements restrict the design process to a limited group of users in indoor scenes. To extend prototyping to general usage, we aim to identify the design difficulties and underlying needs of current design processes for spatially-aware object interactions through empirical studies. Besides, we explore the design space of the spatial interaction for smart objects and discuss the design space in an input-output spatial interaction model. Based on these findings, we present ProObjAR, an all-in-one novel prototyping system with an Augmented Reality Head Mounted Display (AR-HMD). Our system allows designers to easily obtain the spatial data of smart objects being prototyped, specify spatially-aware interactive behaviors from an input-output event triggering workflow, and test the prototyping results in situ. From the user study, we find that ProObjAR simplifies the design procedure and increases design efficiency to a large extent, thus advancing the development of spatially-aware applications in smart ecosystems.

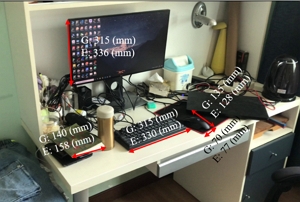

Xiaowei Chen, Xiao Jiang, Jiawei Fang, Shihui Guo, Juncong Lin, Minghong Liao, Guoliang Luo, and Hongbo Fu. DisPad: Flexible On-Body Displacement of Fabric Sensors for Robust Joint-Motion Tracking. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT). 7(1). Article No. 5. March 2023.

Abstract: The last few decades have witnessed an emerging trend of wearable soft sensors; however, there are important signal-processing challenges for soft sensors that still limit their practical deployment. They are error-prone when displaced, resulting in significant deviations from their ideal sensor output. In this work, we propose a novel prototype that integrates an elbow pad with a sparse network of soft sensors. Our prototype is fully bio-compatible, stretchable, and wearable. We develop a learning-based method to predict the elbow orientation angle and achieve an average tracking error of 9.82 degrees for single-user multi-motion experiments. With transfer learning, our method achieves average tracking errors of 10.98 degrees and 11.81 degrees across different motion types and users, respectively. Our core contributions lie in a solution that realizes robust and stable human joint motion tracking across different device displacements.

Fengyi Fang, Hongwei Zhang, Lishuang Zhan, Shihui Guo, Minying Zhang, Juncong Lin, Yipeng Qin, and Hongbo Fu. Handwriting Velcro: Endowing AR Glasses with Personalized and Posture-adaptive Text Input Using Flexible Touch Sensor. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT). 6(4). Article No. 163. December 2022.

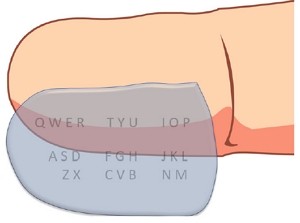

Abstract: The diverse demands of different input scenarios can hardly be met by the small number of fixed input postures offered by existing solutions. In this paper, we present Handwriting Velcro, a novel text input solution for AR glasses based on flexible touch sensors. The distinct advantage of our system is that it can easily stick to different body parts, thus endowing AR glasses with posture-adaptive handwriting input. We explored the design space of on-body device positions and identified the best interaction positions for various user postures. To flatten users’ learning curves, we adapt our device to the established writing habits of different users by training a 36-character (i.e., A-Z, 0-9) recognition neural network in a human-in-the-loop manner. Such a personalization attempt ultimately achieves a low error rate of 0.005 on average for users with different writing styles. Subjective feedback shows that our solution performs well in system practicability and social acceptance. Empirically, we conducted a heuristic study to explore and identify the best interaction Position-Posture Correlation. Experimental results show that our Handwriting Velcro outperforms similar work [6] and a commercial product in both practicality (12.3 WPM) and user-friendliness in different contexts.

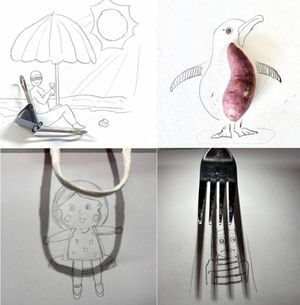

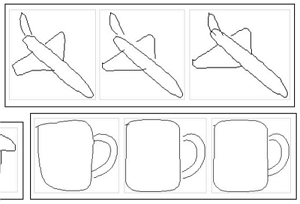

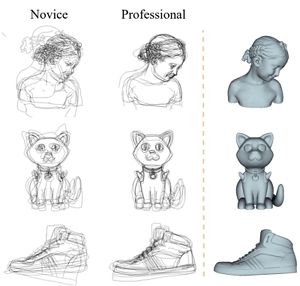

Chufeng Xiao*, Wanchao Su* (joint first author), Jing Liao, Zhouhui Lian, Yi-Zhe Song, and Hongbo Fu. DifferSketching: How Differently Do People Sketch 3D Objects? ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH Asia 2022. 41(6). Article No. 264. December 2022. |

|

|

Abstract: Multiple sketch datasets have been proposed to understand how people draw 3D objects. However, such datasets are often of small scale and cover a small set of objects or categories. In addition, these datasets contain freehand sketches mostly from expert users, making it difficult to compare the drawings by expert and novice users, while such comparisons are critical in informing more effective sketch-based interfaces for either user group. These observations motivate us to analyze how differently people with and without adequate drawing skills sketch 3D objects. We invited 70 novice users and 38 expert users to sketch 136 3D objects, which were presented as 362 images rendered from multiple views. This leads to a new dataset of 3,620 freehand multi-view sketches, which are registered with their corresponding 3D objects under certain views. Our dataset is an order of magnitude larger than the existing datasets. We analyze the collected data at three levels, i.e., sketch-level, stroke-level, and pixel-level, under both spatial and temporal characteristics, and within and across groups of creators. We found that the drawings by professionals and novices show significant differences at stroke-level, both intrinsically and extrinsically. We demonstrate the usefulness of our dataset in two applications: (i) freehand-style sketch synthesis, and (ii) posing it as a potential benchmark for sketch-based 3D reconstruction. |

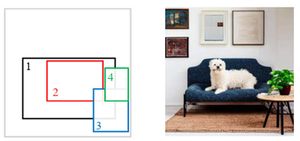

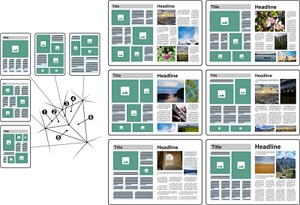

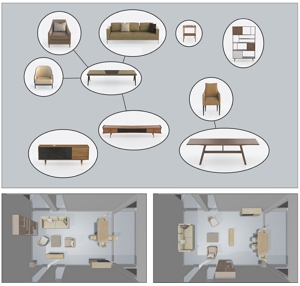

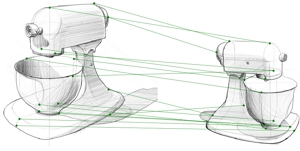

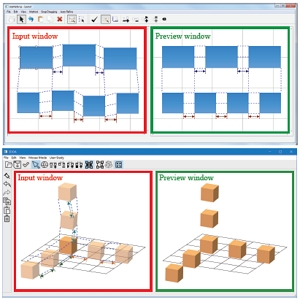

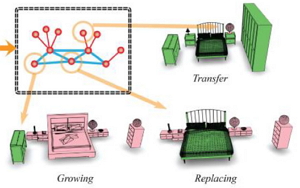

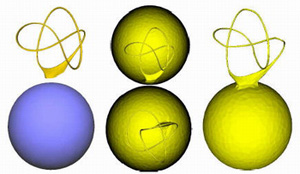

Pengfei Xu, Yifan Li, Zhijin Yang, Weiran Shi, Hongbo Fu, and Hui Huang. Hierarchical Layout Blending with Recursive Optimal Correspondence. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH Asia 2022. 41(6). Article No. 249. December 2022. |

|

|

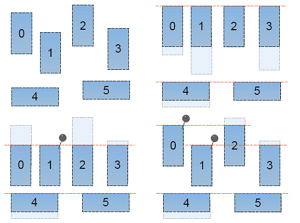

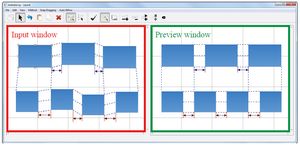

Abstract: We present a novel method for blending hierarchical layouts with semantic labels. The core of our method is a hierarchical structure correspondence algorithm, which recursively finds optimal substructure correspondences, achieving a globally optimal correspondence between a pair of hierarchical layouts. This correspondence is consistent with the structures of both layouts, allowing us to define the union of the layouts’ structures. The resulting compound structure helps extract intermediate layout structures, from which blended layouts can be generated via an optimization approach. The correspondence also defines a similarity measure between layouts in a hierarchically structured view. Our method provides a new way for novel layout creation. The introduced structural similarity measure regularizes the layouts in a hyperspace. We demonstrate two applications in this paper, i.e., exploratory design of novel layouts and sketch-based layout retrieval, and test them on a magazine layout dataset. The effectiveness and feasibility of these two applications are confirmed by the user feedback and the extensive results. |

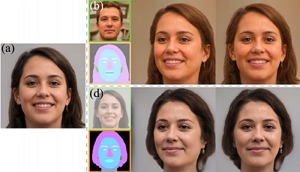

Kaiwen Jiang, Shu-Yu Chen, Feng-Lin Liu, Hongbo Fu, and Lin Gao. NeRFFaceEditing: Disentangled Face Editing in Neural Radiance Fields. ACM SIGGRAPH Asia 2022 Conference Papers. Article No. 31. December 2022. Extended version in IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI). 47(4): 2533 - 2544. April 2025. |

|

|

Abstract: Recent methods for synthesizing 3D-aware face images have achieved rapid development thanks to neural radiance fields, allowing for high quality and fast inference speed. However, existing solutions for editing facial geometry and appearance independently usually require retraining and are not optimized for the recent work of generation, thus tending to lag behind the generation process. To address these issues, we introduce NeRFFaceEditing, which enables editing and decoupling geometry and appearance in the pretrained tri-plane-based neural radiance field while retaining its high quality and fast inference speed. Our key idea for disentanglement is to use the statistics of the tri-plane to represent the high-level appearance of its corresponding facial volume. Moreover, we leverage a generated 3D-continuous semantic mask as an intermediary for geometry editing. We devise a geometry decoder (whose output is unchanged when the appearance changes) and an appearance decoder. The geometry decoder aligns the original facial volume with the semantic mask volume. We also enhance the disentanglement by explicitly regularizing rendered images with the same appearance but different geometry to be similar in terms of color distribution for each facial component separately. Our method allows users to edit via semantic masks with decoupled control of geometry and appearance. Both qualitative and quantitative evaluations show the superior geometry and appearance control abilities of our method compared to existing and alternative solutions. |

Zhiyi Kuang, Yiyang Chen, Hongbo Fu, Kun Zhou, and Youyi Zheng. DeepMVSHair: Deep Hair Modeling from Sparse Views. ACM SIGGRAPH Asia 2022 Conference Papers. Article No. 10. December 2022. |

|

|

Abstract: We present DeepMVSHair, the first deep learning-based method for multi-view hair strand reconstruction. The key component of our pipeline is HairMVSNet, a differentiable neural architecture which represents a spatial hair structure as a continuous 3D hair growing direction field implicitly. Specifically, given a 3D query point, we decide its occupancy value and direction from observed 2D structure features. With the query point’s pixel-aligned features from each input view, we utilize a view-aware transformer encoder to aggregate anisotropic structure features to an integrated representation, which is decoded to yield 3D occupancy and direction at the query point. HairMVSNet effectively gathers multi-view hair structure features and preserves high-frequency details based on this implicit representation. Guided by HairMVSNet, our hair-growing algorithm produces results faithful to input multi-view images. We propose a novel image-guided multi-view strand deformation algorithm to enrich modeling details further. Extensive experiments show that the results by our sparse-view method are comparable to those by state-of-the-art dense multi-view methods and significantly better than those by single-view and sparse-view methods. In addition, our method is an order of magnitude faster than previous multi-view hair modeling methods. |

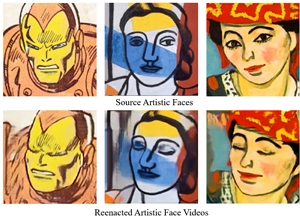

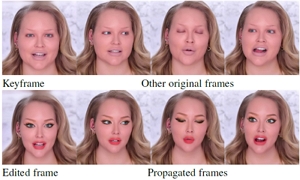

Feng-Lin Liu, Shu-Yu Chen, Yu-Kun Lai, Chunpeng Li, Yue-Ren Jiang, Hongbo Fu, and Lin Gao. DeepFaceVideoEditing: Sketch-based Deep Editing of Face Videos. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH 2022. 41(4). Article No. 167. August 2022. |

|

|

Abstract: Sketches, which are simple and concise, have been used in recent deep image synthesis methods to allow intuitive generation and editing of facial images. However, it is nontrivial to extend such methods to video editing due to various challenges, ranging from appropriate manipulation propagation and fusion of multiple editing operations to ensuring temporal coherence and visual quality. To address these issues, we propose a novel sketch-based facial video editing framework, in which we represent editing manipulations in latent space and propose specific propagation and fusion modules to generate high-quality video editing results based on StyleGAN3. Specifically, we first design an optimization approach to represent sketch editing manipulations by editing vectors, which are propagated to the whole video sequence using a proper strategy to cope with different editing needs. In particular, input editing operations are classified into two categories: temporally consistent editing and temporally variant editing. The former (e.g., change of face shape) is applied to the whole video sequence directly, while the latter (e.g., change of facial expression or dynamics) is propagated with the guidance of expression or only affects adjacent frames in a given time window. Since users often perform different editing operations in multiple frames, we further present a region-aware fusion approach to fuse diverse editing effects. Our method supports video editing on facial structure and expression movement by sketch, which cannot be achieved by previous works. Both qualitative and quantitative evaluations show the superior editing ability of our system to existing and alternative solutions. |

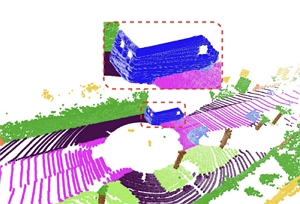

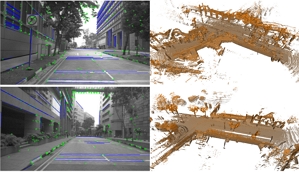

Zeyu Hu*, Xuyang Bai*, Runze Zhang, Xin Wang, Guangyuan Sun, Hongbo Fu, and Chiew-Lan Tai. LiDAL: Inter-frame Uncertainty Based Active Learning for 3D LiDAR Semantic Segmentation. ECCV 2022. October 2022. (Acceptance rate: 28%) |

|

|

Abstract: We propose LiDAL, a novel active learning method for 3D LiDAR semantic segmentation by exploiting inter-frame uncertainty among LiDAR frames. Our core idea is that a well-trained model should generate robust results irrespective of viewpoints for scene scanning and thus the inconsistencies in model predictions across frames provide a very reliable measure of uncertainty for active sample selection. To implement this uncertainty measure, we introduce new inter-frame divergence and entropy formulations, which serve as the metrics for active selection. Moreover, we demonstrate additional performance gains by predicting and incorporating pseudo-labels, which are also selected using the proposed inter-frame uncertainty measure. Experimental results validate the effectiveness of LiDAL: we achieve 95% of the performance of fully supervised learning with less than 5% of annotations on the SemanticKITTI and nuScenes datasets, outperforming state-of-the-art active learning methods. |
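The idea of scoring uncertainty from disagreement across frames can be illustrated with a minimal sketch. This is not the paper's exact formulation: it assumes that the same 3D point has already been matched across frames, that each frame yields a class-probability vector for it, and that divergence is measured as the mean KL divergence from the averaged prediction. The function names are hypothetical.

```python
import math

def mean_distribution(preds):
    """Average the per-frame class distributions predicted for one 3D point."""
    n = len(preds)
    return [sum(p[c] for p in preds) / n for c in range(len(preds[0]))]

def entropy(dist, eps=1e-12):
    """Shannon entropy of a class distribution (natural log)."""
    return -sum(p * math.log(p + eps) for p in dist)

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) between two class distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def inter_frame_scores(preds):
    """Return (divergence, entropy) for one point observed in several frames.

    Assumption: divergence is the mean KL divergence of each frame's
    prediction from the averaged prediction; entropy is taken on the average.
    """
    mean = mean_distribution(preds)
    divergence = sum(kl_divergence(p, mean) for p in preds) / len(preds)
    return divergence, entropy(mean)

# Three frames that agree versus three frames that disagree on the class.
consistent = [[0.9, 0.05, 0.05]] * 3
inconsistent = [[0.9, 0.05, 0.05], [0.05, 0.9, 0.05], [0.05, 0.05, 0.9]]

div_c, ent_c = inter_frame_scores(consistent)
div_i, ent_i = inter_frame_scores(inconsistent)
```

Under these assumptions, points whose predictions disagree across frames receive higher scores on both measures and would be prioritized for annotation.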

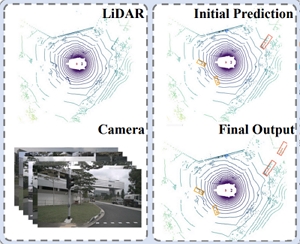

Xuyang Bai*, Zeyu Hu*, Xinge Zhu, Qingqiu Huang, Yilun Chen, Hongbo Fu, and Chiew-Lan Tai. TransFusion: Robust LiDAR-Camera Fusion for 3D Object Detection with Transformers. CVPR 2022. June 2022. (Acceptance rate: 25.3%) |

|

|

Abstract: LiDAR and camera are two important sensors for 3D object detection in autonomous driving. Despite the increasing popularity of sensor fusion in this field, the robustness against inferior image conditions, e.g., bad illumination and sensor misalignment, is under-explored. Existing fusion methods are easily affected by such conditions, mainly due to a hard association of LiDAR points and image pixels, established by calibration matrices. We propose TransFusion, a robust solution to LiDAR-camera fusion with a soft-association mechanism to handle inferior image conditions. Specifically, our TransFusion consists of convolutional backbones and a detection head based on a transformer decoder. The first layer of the decoder predicts initial bounding boxes from a LiDAR point cloud using a sparse set of object queries, and its second decoder layer adaptively fuses the object queries with useful image features, leveraging both spatial and contextual relationships. The attention mechanism of the transformer enables our model to adaptively determine where and what information should be taken from the image, leading to a robust and effective fusion strategy. We additionally design an image-guided query initialization strategy to deal with objects that are difficult to detect in point clouds. TransFusion achieves state-of-the-art performance on large-scale datasets. We provide extensive experiments to demonstrate its robustness against degenerated image quality and calibration errors. We also extend the proposed method to the 3D tracking task and achieve 1st place on the nuScenes tracking leaderboard, showing its effectiveness and generalization capability. |

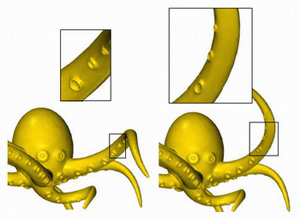

Keyu Wu, Yifan Ye, Lingchen Yang, Hongbo Fu, Kun Zhou, and Youyi Zheng. NeuralHDHair: Automatic High-fidelity Hair Modeling from a Single Image Using Implicit Neural Representations. CVPR 2022. June 2022. (Acceptance rate: 25.3%) |

|

|

Abstract: Undoubtedly, high-fidelity 3D hair plays an indispensable role in digital humans. However, existing monocular hair modeling methods are either tricky to deploy in digital systems (e.g., due to their dependence on complex user interactions or large databases) or can produce only a coarse geometry. In this paper, we introduce NeuralHDHair, a flexible, fully automatic system for modeling high-fidelity hair from a single image. The key enablers of our system are two carefully designed neural networks: an IRHairNet (Implicit representation for hair using neural network) for inferring high-fidelity 3D hair geometric features (3D orientation field and 3D occupancy field) hierarchically and a GrowingNet (Growing hair strands using neural network) to efficiently generate 3D hair strands in parallel. Specifically, we work in a coarse-to-fine manner and propose a novel voxel-aligned implicit function (VIFu) to represent the global hair feature, which is further enhanced by the local details extracted from a hair luminance map. To improve the efficiency of a traditional hair growth algorithm, we adopt a local neural implicit function to grow strands based on the estimated 3D hair geometric features. Extensive experiments show that our method is capable of constructing a high-fidelity 3D hair model from a single image, both efficiently and effectively, and achieves state-of-the-art performance. [Paper] |

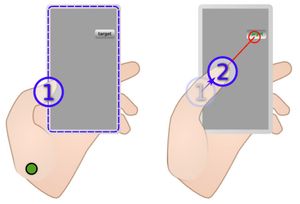

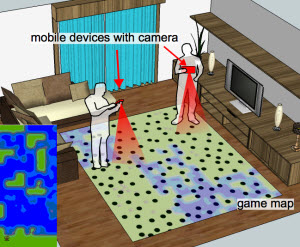

Hui Ye* and Hongbo Fu. ProGesAR: Mobile AR Prototyping for Proxemic and Gestural Interactions with Real-world IoT Enhanced Spaces. CHI 2022. Article No. 130. April 30 - May 5 2022. |

|

|

Abstract: Real-world IoT enhanced spaces involve diverse proximity- and gesture-based interactions between users and IoT devices/objects. Prototyping such interactions benefits various applications like the conceptual design of ubicomp space. AR (Augmented Reality) prototyping provides a flexible way to achieve early-stage designs by overlaying digital contents on real objects or environments. However, existing AR prototyping approaches have focused on prototyping AR experiences or context-aware interactions from the first-person view instead of full-body proxemic and gestural (pro-ges for short) interactions of real users in the real world. In this work, we conducted interviews to figure out the challenges of prototyping pro-ges interactions in real-world IoT enhanced spaces. Based on the findings, we present ProGesAR, a mobile AR tool for prototyping pro-ges interactions of a subject in a real environment from a third-person view, and examining the prototyped interactions from both the first- and third-person views. Our interface supports the effects of virtual assets dynamically triggered by a single subject, with the triggering events based on four features: location, orientation, gesture, and distance. We conduct a preliminary study by inviting participants to prototype in a freeform manner using ProGesAR. The early-stage findings show that with ProGesAR, users can easily and quickly prototype their design ideas about pro-ges interactions. |

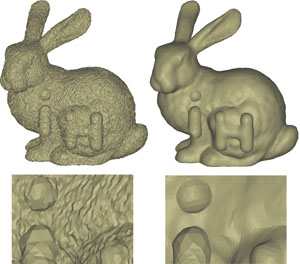

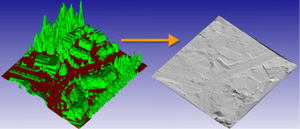

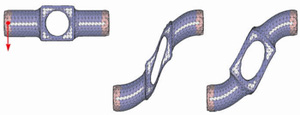

Yuefan Shen, Hongbo Fu, Zhongshuo Du, Xiang Chen, Evgeny Burnaev, Denis Zorin, Kun Zhou, and Youyi Zheng. GCN-Denoiser: Mesh Denoising with Graph Convolutional Networks. ACM Transactions on Graphics (TOG). 41(1). Article No. 8. February 2022. |

|

|

Abstract: In this paper, we present GCN-Denoiser, a novel feature-preserving mesh denoising method based on graph convolutional networks (GCNs). Unlike previous learning-based mesh denoising methods that exploit hand-crafted or voxel-based representations for feature learning, our method explores the structure of a triangular mesh itself and introduces a graph representation followed by graph convolution operations in the dual space of triangles. We show such a graph representation naturally captures the geometry features while being lightweight for both training and inference. To facilitate effective feature learning, our network exploits both static and dynamic edge convolutions, which allow us to learn information from both the explicit mesh structure and potential implicit relations among unconnected neighbors. To better approximate an unknown noise function, we introduce a cascaded optimization paradigm to progressively regress the noise-free facet normals with multiple GCNs. GCN-Denoiser achieves new state-of-the-art results on multiple noise datasets, including CAD models often containing sharp features and raw scan models with real noise captured from different devices. |

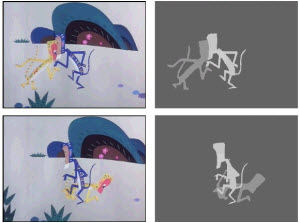

Chufeng Xiao*, Deng Yu*, Xiaoguang Han, Youyi Zheng, and Hongbo Fu. SketchHairSalon: Deep Sketch-based Hair Image Synthesis. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH Asia 2021. 40(6). Article No. 216. December 2021. |

|

|

Abstract: Recent deep generative models allow real-time generation of hair images from sketch inputs. Existing solutions often require a user-provided binary mask to specify a target hair shape. This not only costs users extra labor but also fails to capture complicated hair boundaries. Those solutions usually encode hair structures via orientation maps, which, however, are not very effective at encoding complex structures. We observe that colored hair sketches already implicitly define target hair shapes as well as hair appearance and are more flexible to depict hair structures than orientation maps. Based on these observations, we present SketchHairSalon, a two-stage framework for generating realistic hair images directly from freehand sketches depicting desired hair structure and appearance. At the first stage, we train a network to predict a hair matte from an input hair sketch, with an optional set of non-hair strokes. At the second stage, another network is trained to synthesize the structure and appearance of hair images from the input sketch and the generated matte. To make the networks in the two stages aware of long-term dependency of strokes, we apply self-attention modules to them. To train these networks, we present a new moderately large dataset, containing diverse hairstyles with annotated hair sketch-image pairs and corresponding hair mattes. Two efficient methods for sketch completion are proposed to automatically complete repetitive braided parts and hair strokes, respectively, thus reducing the workload of users. Based on the trained networks and the two sketch completion strategies, we build an intuitive interface to allow even novice users to design visually pleasing hair images exhibiting various hair structures and appearance via freehand sketches. The qualitative and quantitative evaluations show the advantages of the proposed system over the existing or alternative solutions.
[Paper, Video (@Vimeo), Video (@Youtube), Video (by "Two Minute Papers"), Code, Project] |

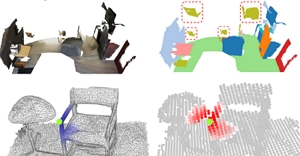

Zeyu Hu*, Xuyang Bai*, Jiaxiang Shang, Runze Zhang, Jiayu Dong, Xin Wang, Guangyuan Sun, Hongbo Fu, and Chiew-Lan Tai. VMNet: Voxel-Mesh Network for Geodesic-Aware 3D Semantic Segmentation. ICCV 2021. October 2021. (Acceptance rate: 25.9%) Oral Presentation |

|

|

Abstract: In recent years, sparse voxel-based methods have become the state of the art for 3D semantic segmentation of indoor scenes, thanks to the powerful 3D CNNs. Nevertheless, being oblivious to the underlying geometry, voxel-based methods suffer from ambiguous features on spatially close objects and struggle with handling complex and irregular geometries due to the lack of geodesic information. In view of this, we present Voxel-Mesh Network (VMNet), a novel 3D deep architecture that operates on the voxel and mesh representations leveraging both the Euclidean and geodesic information. Intuitively, the Euclidean information extracted from voxels can offer contextual cues representing interactions between nearby objects, while the geodesic information extracted from meshes can help separate objects that are spatially close but have disconnected surfaces. To incorporate such information from the two domains, we design an intra-domain attentive module for effective feature aggregation and an inter-domain attentive module for adaptive feature fusion. Experimental results validate the effectiveness of VMNet: specifically, on the challenging ScanNet dataset for large-scale segmentation of indoor scenes, it outperforms the state-of-the-art SparseConvNet and MinkowskiNet (74.6% vs 72.5% and 73.6% in mIoU) with a simpler network structure (17M vs 30M and 38M parameters). |

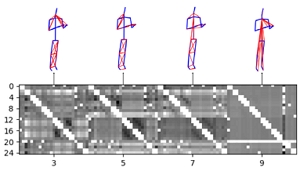

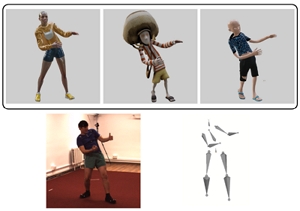

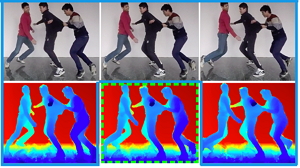

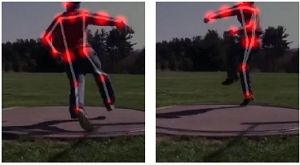

Jingyuan Liu*, Mingyi Shi, Qifeng Chen, Hongbo Fu, and Chiew-Lan Tai. Normalized Human Pose Features for Human Action Video Alignment. ICCV 2021. October 2021. (Acceptance rate: 25.9%) Oral Presentation

|

|

|

Abstract: We present a novel approach for extracting human pose features from human action videos. The goal is to let the pose features capture only the poses of the action while being invariant to other factors, including video backgrounds, the video subject's anthropometric characteristics and viewpoints. Such human pose features facilitate the comparison of pose similarity and can be used for down-stream tasks, such as human action video alignment and pose retrieval. The key to our approach is to first normalize the poses in the video frames by retargeting the poses onto a pre-defined 3D skeleton to not only disentangle subject physical features, such as bone lengths and ratios, but also to unify global orientations of the poses. Then the normalized poses are mapped to a pose embedding space of high-level features, learned via unsupervised metric learning. We evaluate the effectiveness of our normalized features both qualitatively by visualizations, and quantitatively by a video alignment task on the Human3.6M dataset and an action recognition task on the Penn Action dataset. |

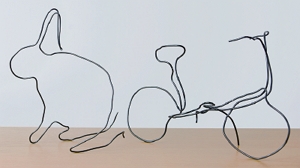

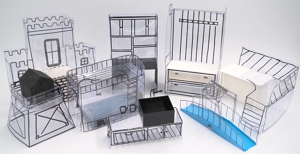

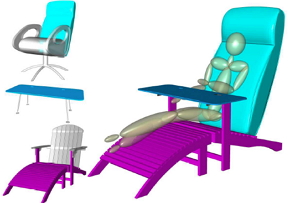

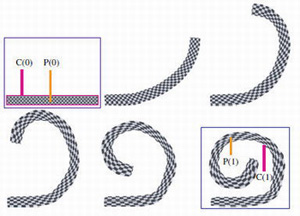

Zhijin Yang, Pengfei Xu, Hongbo Fu, and Hui Huang. WireRoom: Model-guided Explorative Design of Abstract Wire Art. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH 2021. 40(4). Article No. 128. August 2021. (Acceptance rate: 35.0%) |

|

|

Abstract: We present WireRoom, a computational framework for the intelligent design of abstract 3D wire art to depict a given 3D model. Our algorithm generates a set of 3D wire shapes from the 3D model with informative, visually pleasing, and concise structures. It is achieved by solving a dynamic travelling salesman problem on the surface of the 3D model with a multi-path expansion approach. We introduce a novel explorative computational design procedure by taking the generated wire shapes as candidates, avoiding manual design of the wire shape structure. We compare our algorithm with a baseline method and conduct a user study to investigate the usability of the framework and the quality of the produced wire shapes. The results of the comparison and user study confirm that our framework is effective for producing informative, visually pleasing, and concise wire shapes. |

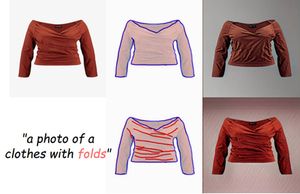

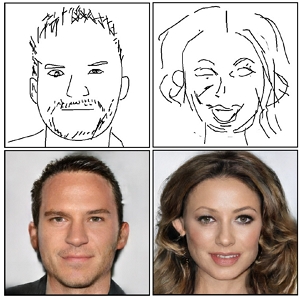

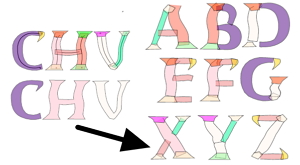

Shu-Yu Chen, Feng-Lin Liu, Yu-Kun Lai, Paul Rosin, Chun-Peng Li, Hongbo Fu, and Lin Gao. DeepFaceEditing: Deep Face Generation and Editing with Disentangled Geometry and Appearance Control. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH 2021. 40(4). Article No. 90. August 2021. (Acceptance rate: 35.0%) |

|

|

Abstract: Recent facial image synthesis methods have been mainly based on conditional generative models. Sketch-based conditions can effectively describe the geometry of faces, including the contours of facial components, hair structures, as well as salient edges (e.g., wrinkles) on face surfaces but lack effective control of appearance, which is influenced by color, material, lighting condition, etc. To have more control of generated results, one possible approach is to apply existing disentangling works to disentangle face images into geometry and appearance representations. However, existing disentangling methods are not optimized for human face editing, and cannot achieve fine control of facial details such as wrinkles. To address this issue, we propose DeepFaceEditing, a structured disentanglement framework specifically designed for face images to support face generation and editing with disentangled control of geometry and appearance. We adopt a local-to-global approach to incorporate the face domain knowledge: local component images are decomposed into geometry and appearance representations, which are fused consistently using a global fusion module to improve generation quality. We exploit sketches to assist in extracting a better geometry representation, which also supports intuitive geometry editing via sketching. The resulting method can either extract the geometry and appearance representations from face images, or directly extract the geometry representation from face sketches. Such representations allow users to easily edit and synthesize face images, with decoupled control of their geometry and appearance. Both qualitative and quantitative evaluations show the superior detail and appearance control abilities of our method compared to state-of-the-art methods. |

Shi-Sheng Huang, Ze-Yu Ma, Tai-Jiang Mu, Hongbo Fu, and Shi-Min Hu. Supervoxel Convolution for Online 3D Semantic Segmentation. ACM Transactions on Graphics (TOG). 40(3). Article No. 34. June 2021. |

|

|

Abstract: Online 3D semantic segmentation, which aims to perform real-time 3D scene reconstruction along with semantic segmentation, is an important but challenging topic. A key challenge is to strike a balance between efficiency and segmentation accuracy. There are very few deep learning based solutions to this problem, since the commonly used deep representations based on volumetric-grids or points do not provide efficient 3D representation and organization structure for online segmentation. Observing that on-surface supervoxels, i.e., clusters of on-surface voxels, provide a compact representation of 3D surfaces and bring efficient connectivity structure via supervoxel clustering, we explore a supervoxel-based deep learning solution for this task. To this end, we contribute a novel convolution operation (SVConv) directly on supervoxels. SVConv can efficiently fuse the multi-view 2D features and 3D features projected on supervoxels during the online 3D reconstruction, and leads to an effective supervoxel-based convolutional neural network, termed Supervoxel-CNN, enabling 2D-3D joint learning for 3D semantic prediction. With the Supervoxel-CNN, we propose a clustering-then-prediction online 3D semantic segmentation approach. The extensive evaluations on the public 3D indoor scene datasets show that our approach significantly outperforms the existing online semantic segmentation systems in terms of efficiency or accuracy. |

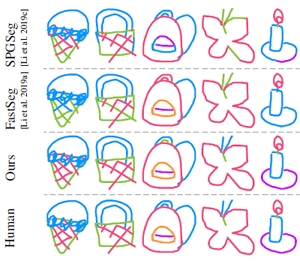

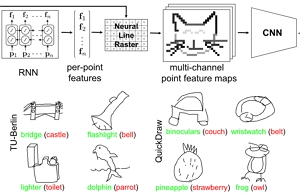

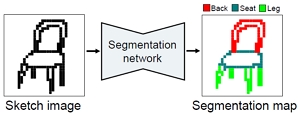

Lumin Yang, Jiajie Zhuang, Hongbo Fu, Xiangzhi Wei, Kun Zhou, and Youyi Zheng. SketchGNN: Semantic Sketch Segmentation with Graph Neural Networks. ACM Transactions on Graphics (TOG). 40(3). Article No. 28. August 2021. |

|

|

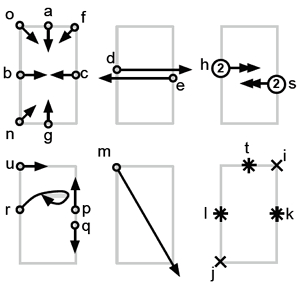

Abstract: We introduce SketchGNN, a convolutional graph neural network for semantic segmentation and labeling of freehand vector sketches. We treat an input stroke-based sketch as a graph, with nodes representing the sampled points along input strokes and edges encoding the stroke structure information. To predict the per-node labels, our SketchGNN uses graph convolution and a static-dynamic branching network architecture to extract the features at three levels, i.e., point-level, stroke-level, and sketch-level. SketchGNN significantly improves the accuracy of the state-of-the-art methods for semantic sketch segmentation (by 11.2% in the pixel-based metric and 18.2% in the component-based metric over a large-scale challenging SPG dataset) and has orders of magnitude fewer parameters than both image-based and sequence-based methods. |

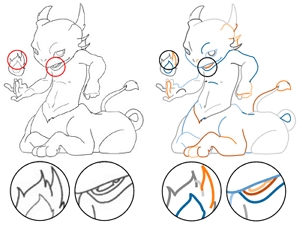

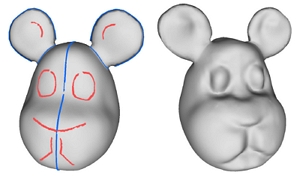

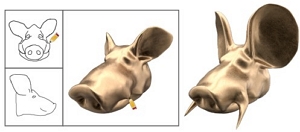

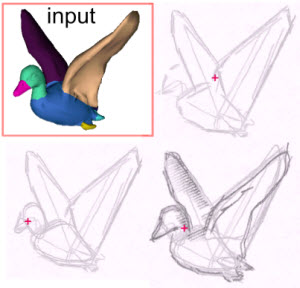

Zhongjin Luo, Jie Zhou*, Heming Zhu, Dong Du, Xiaoguang Han, and Hongbo Fu. SimpModeling: Sketching Implicit Field to Guide Mesh Modeling for 3D Animalmorphic Head Design. UIST 2021. October 2021. (Acceptance rate: 25.9%). |

|

|

Abstract: Head shapes play an important role in designing 3D virtual characters. In this work, we propose a novel sketch-based interface for modeling animalmorphic heads - a very popular kind of head in character design. Although sketching provides an easy way to depict desired shapes, it is challenging to infer dense geometric information from sparse line drawings. Recently, deepnet-based approaches have been taken to address this challenge and try to produce rich geometric details from very few strokes. However, while such methods reduce users' workload, they offer less controllability of target shapes. This is mainly due to the uncertainty of neural prediction. Our system tackles this issue and provides more controllability from three aspects: 1) we separate coarse shape design and geometric detail specification into two stages and respectively provide different sketching ways; 2) in coarse model designing, sketches are used for both shape inference and geometric constraints to determine global geometry; 3) in both stages, we use the advanced implicit-based shape inference methods, which have better ability to handle the domain gap between freehand sketches and synthetic ones used for training. Experimental results confirm the effectiveness of our method and the usability of our interactive system. We also contribute a dataset of high-quality 3D animal heads, which are manually created by artists. [Paper, Video, Code, Presentation, Project] |

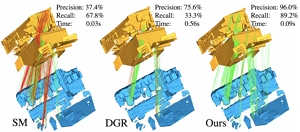

Xuyang Bai*, Zixin Luo, Lei Zhou, Hongkai Chen, Lei Li*, Zeyu Hu*, Hongbo Fu, and Chiew-Lan Tai. PointDSC: Robust Point Cloud Registration using Deep Spatial Consistency. CVPR 2021. June 2021. (Acceptance rate: 27.0%). |

|

|

Abstract: Removing outlier correspondences is one of the critical steps for successful feature-based point cloud registration. Despite the increasing popularity of introducing deep learning techniques in this field, spatial consistency, which is essentially established by a Euclidean transformation between point clouds, has received almost no individual attention in existing learning frameworks. In this paper, we present PointDSC, a novel deep neural network that explicitly incorporates spatial consistency for pruning outlier correspondences. First, we propose a nonlocal feature aggregation module, weighted by both feature and spatial coherence, for feature embedding of the input correspondences. Second, we formulate a differentiable spectral matching module, supervised by pairwise spatial compatibility, to estimate the inlier confidence of each correspondence from the embedded features. With modest computation cost, our method outperforms the state-of-the-art handcrafted and learning-based outlier rejection approaches on several real-world datasets by a significant margin. We also show its wide applicability by combining PointDSC with different 3D local descriptors. |
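The notion of spatial consistency can be illustrated with the classic, non-learned form of spectral matching, which is a stand-in here and not the network described in the abstract. The sketch assumes a rigid transformation, so the distance between two source points should equal the distance between their matched target points; the `sigma` tolerance, the scoring function, and all names are illustrative assumptions.

```python
import math

def dist(a, b):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def compatibility_matrix(src, dst, sigma=0.1):
    """Pairwise spatial compatibility of correspondences (src[i] -> dst[i]).

    A rigid motion preserves lengths, so two inlier correspondences i and j
    should satisfy |src_i - src_j| == |dst_i - dst_j|. The score decays from
    1 to 0 as the length difference approaches sigma (an assumed tolerance).
    """
    n = len(src)
    m = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d = abs(dist(src[i], src[j]) - dist(dst[i], dst[j]))
                m[i][j] = max(0.0, 1.0 - (d / sigma) ** 2)
    return m

def leading_eigenvector(m, iters=100):
    """Power iteration; entries serve as per-correspondence inlier confidence."""
    n = len(m)
    v = [1.0] * n
    for _ in range(iters):
        w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w)) or 1.0
        v = [x / norm for x in w]
    return v

# Four correspondences related by a pure translation, plus one outlier.
src = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
dst = [(2, 3, 4), (3, 3, 4), (2, 4, 4), (2, 3, 5), (10, -5, 7)]

confidence = leading_eigenvector(compatibility_matrix(src, dst))
```

In this toy case the four mutually consistent correspondences share the confidence mass while the outlier receives none, so thresholding `confidence` would prune it.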

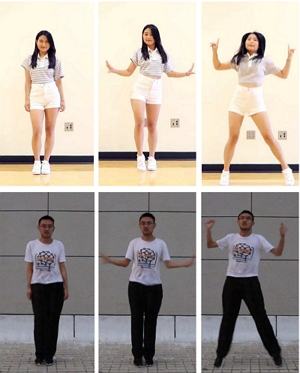

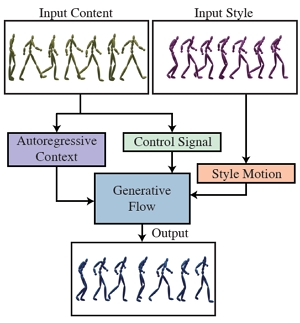

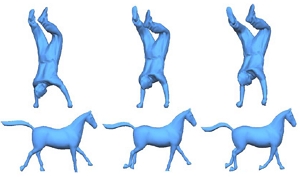

Yu-Hui Wen, Zhipeng Yang (joint first author), Hongbo Fu, Lin Gao, Yannan Sun, and Yong-Jin Liu. Autoregressive Stylized Motion Synthesis with Generative Flow. CVPR 2021. June 2021. (Acceptance rate: 27.0%). |


Abstract: Style-based motion synthesis is an important problem in many computer graphics and computer vision applications, including human animation, games, and robotics. Most existing deep learning methods for this problem are supervised and trained by registered motion pairs. In addition, these methods are often limited to yielding a deterministic output, given a pair of style and content motions. In this paper, we propose an unsupervised approach for motion style transfer by synthesizing stylized motions autoregressively using a generative flow model M. M is trained to maximize the exact likelihood of a collection of unlabeled motions, based on an autoregressive context of poses in previous frames and a control signal representing the movement of a root joint. Thanks to invertible flow transformations, latent codes that encode deep properties of motion styles are efficiently inferred by M. By combining the latent codes (from an input style motion S) with the autoregressive context and control signal (from an input content motion C), M outputs a stylized motion which transfers style from S to C. Moreover, our model is probabilistic and is able to generate various plausible motions with a specific style. We evaluate the proposed model on motion capture datasets containing different human motion styles. Experiment results show that our model outperforms the state-of-the-art methods, despite not requiring manually labeled training data. |

Ying Jiang, Congyi Zhang, Hongbo Fu, Alberto Cannavo, Fabrizio Lamberti, Henry Lau, and Wenping Wang. HandPainter - 3D Sketching in VR with Hand-based Physical Proxy. CHI 2021. May 2021. (Acceptance rate: 26.3%). |


Abstract: 3D sketching in virtual reality (VR) enables users to create 3D virtual objects intuitively and immersively. However, previous studies showed that mid-air drawing may lead to inaccurate sketches. To address this issue, we propose to use one hand as a canvas proxy and the index finger of the other hand as a 3D pen. To this end, we first performed a formative study to compare two-handed interaction with tablet-pen interaction for VR sketching. Based on the findings of this study, we designed HandPainter, a VR sketching system which focuses on the direct use of two hands for 3D sketching without requiring any tablet, pen, or VR controller. Our implementation is based on a pair of VR gloves, which provide hand tracking and gesture capture. We devised a set of intuitive gestures to control various functionalities required during 3D sketching, such as canvas panning and drawing positioning. We showed the effectiveness of HandPainter by presenting a number of sketching results and discussing the outcomes of a user study-based comparison with mid-air drawing and tablet-based sketching tools. |

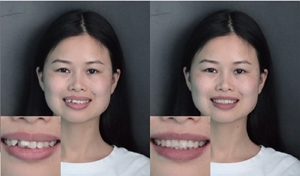

Lingchen Yang, Zefeng Shi, Hongbo Fu, Yiqian Wu, Kun Zhou, and Youyi Zheng. iOrthoPredictor: Deep Prediction of Teeth Alignment in Single Images. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH Asia 2020. November/December 2020. (Acceptance rate: xx.x%) |


Abstract: In this paper, we present iOrthoPredictor, a novel system to visually predict the teeth alignment effect in a single photograph. Our system takes a frontal face image of a patient with visible malpositioned teeth along with the corresponding 3D teeth model as input, and generates a facial image with aligned teeth, mimicking the real orthodontic treatment effect. The key enabler of our method is an effective disentanglement of an explicit representation of the teeth geometry from the in-mouth appearance, where the accuracy of teeth geometry transformation is ensured by the 3D teeth model while the in-mouth appearance is modeled as a latent variable. The disentanglement enables us to achieve fine-scale geometry control over the alignment while retaining the original teeth attributes and lighting conditions. The whole pipeline consists of three deep neural networks: a UNet architecture to explicitly extract the 2D teeth silhouette maps representing the teeth geometry in the input photo, an encoder-decoder based generative model to synthesize the in-mouth appearance conditional on the teeth geometry, and a novel multilayer perceptron (MLP) based network to predict the aligned 3D teeth model. Extensive experimental results and a user study demonstrate that iOrthoPredictor is effective in generating high-quality visual prediction of teeth alignment effect, and readily applicable to industrial orthodontic treatments. |

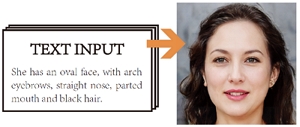

Shu-Yu Chen, Wanchao Su* (joint first author), Lin Gao, Shihong Xia, and Hongbo Fu. DeepFaceDrawing: Deep Generation of Face Images from Sketches. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH 2020. 39(4). Article 72. July 2020. (Acceptance rate: xx.x%) |


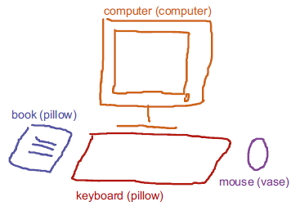

Abstract: Recent deep image-to-image translation techniques allow fast generation of face images from freehand sketches. However, existing solutions tend to overfit to sketches, thus requiring professional sketches or even edge maps as input. To address this issue, our key idea is to implicitly model the shape space of plausible face images and synthesize a face image in this space to approximate an input sketch. We take a local-to-global approach. We first learn feature embeddings of key face components, and push corresponding parts of input sketches towards underlying component manifolds defined by the feature vectors of face component samples. We also propose another deep neural network to learn the mapping from the embedded component features to realistic images with multi-channel feature maps as intermediate results to improve the information flow. Our method essentially uses input sketches as soft constraints and is thus able to produce high-quality face images even from rough and/or incomplete sketches. Our tool is easy to use even for non-artists, while still supporting fine-grained control of shape details. Both qualitative and quantitative evaluations show the superior generation ability of our system to existing and alternative solutions. The usability and expressiveness of our system are confirmed by a user study. |

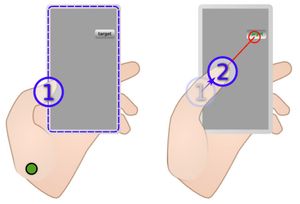

Hui Ye*, Kin Chung Kwan* (joint first author), Wanchao Su*, and Hongbo Fu. ARAnimator: In-situ Character Animation in Mobile AR with User-defined Motion Gestures. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH 2020. 39(4). Article 83. July 2020. (Acceptance rate: xx.x%) |


Abstract: Creating animated virtual AR characters closely interacting with real environments is interesting but difficult. Existing systems adopt video see-through approaches to indirectly control a virtual character in mobile AR, making close interaction with real environments not intuitive. In this work we use an AR-enabled mobile device to directly control the position and motion of a virtual character situated in a real environment. We conduct two guessability studies to elicit user-defined motions of a virtual character interacting with real environments, and a set of user-defined motion gestures describing specific character motions. We found that an SVM-based learning approach achieves reasonably high accuracy for gesture classification from the motion data of a mobile device. We present ARAnimator, which allows novice and casual animation users to directly represent a virtual character by an AR-enabled mobile phone and control its animation in AR scenes using motion gestures of the device, followed by animation preview and interactive editing through a video see-through interface. Our experimental results show that with ARAnimator, users are able to easily create in-situ character animations closely interacting with different real environments. |

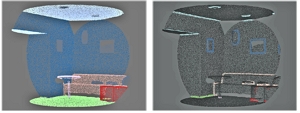

Sheng Yang, Beichen Li, Yanpei Cao, Hongbo Fu, Yukun Lai, Leif Kobbelt, and Shi-Min Hu. Noise-Resilient Reconstruction of Panoramas and 3D Scenes using Robot-Mounted Unsynchronized Commodity RGB-D Cameras. ACM Transactions on Graphics (TOG) - presented at SIGGRAPH 2020. 39(5). Article No. 152. July 2020. |


Abstract: We present a two-stage approach to first constructing 3D panoramas and then stitching them for noise-resilient reconstruction of large-scale indoor scenes. Our approach requires multiple unsynchronized RGB-D cameras, mounted on a robot platform which can perform in-place rotations at different locations in a scene. Such cameras rotate on a common (but unknown) axis, which provides a novel perspective for coping with unsynchronized cameras, without requiring sufficient overlap of their Field-of-View (FoV). Based on this key observation, we propose novel algorithms to track these cameras simultaneously. Furthermore, during the integration of raw frames onto an equirectangular panorama, we derive uncertainty estimates from multiple measurements assigned to the same pixels. This enables us to appropriately model the sensing noise and consider its influence, so as to achieve better noise resilience, and improve the geometric quality of each panorama and the accuracy of global inter-panorama registration. We evaluate and demonstrate the performance of our proposed method for enhancing the geometric quality of scene reconstruction from both real-world and synthetic scans. [Paper] |

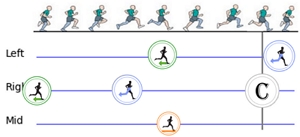

Jingyuan Liu*, Hongbo Fu, and Chiew-Lan Tai. PoseTween: Pose-driven Tween Animation. UIST 2020. 791-804. (Acceptance rate: 21.6%). October 2020. |


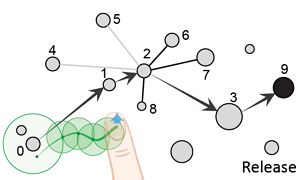

Abstract: Augmenting human action videos with visual effects often requires professional tools and skills. To make this more accessible to novice users, existing attempts have focused on automatically adding visual effects to faces and hands, or letting virtual objects strictly track certain body parts, resulting in rigid-looking effects. We present PoseTween, an interactive system that allows novice users to easily add vivid virtual objects whose movement interacts with a moving subject in an input video. Our key idea is to leverage the motion of the subject to create pose-driven tween animations of virtual objects. With our tool, a user only needs to edit the properties of a virtual object with respect to the subject’s movement at keyframes, and the object is associated with certain body parts automatically. The properties of the object at intermediate frames are then determined by both the body movement and the interpolated object keyframe properties, producing natural object movements and interactions with the subject. We design a user interface to facilitate editing of keyframes and previewing animation results. Our user study shows that, for novice users making pose-driven tween animations, PoseTween requires significantly less editing time and fewer keyframes than traditional tween animation. [Paper, Video, Presentation] |

Zeyu Hu*, Mingmin Zhen, Xuyang Bai*, Hongbo Fu, and Chiew-Lan Tai. JSENet: Joint Semantic Segmentation and Edge Detection Network for 3D Point Clouds. ECCV 2020. (Acceptance rate: 27.0%). August 2020. |


Abstract: Semantic segmentation and semantic edge detection can be seen as two dual problems with close relationships in computer vision. Despite the fast evolution of learning-based 3D semantic segmentation methods, little attention has been drawn to the learning of 3D semantic edge detectors, even less to a joint learning method for the two tasks. In this paper, we tackle the 3D semantic edge detection task for the first time and present a new two-stream fully-convolutional network that jointly performs the two tasks. In particular, we design a joint refinement module that explicitly wires region information and edge information to improve the performances of both tasks. Further, we propose a novel loss function that encourages the network to produce semantic segmentation results with better boundaries. Extensive evaluations on S3DIS and ScanNet datasets show that our method achieves on par or better performance than the state-of-the-art methods for semantic segmentation and outperforms the baseline methods for semantic edge detection. |

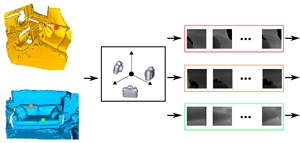

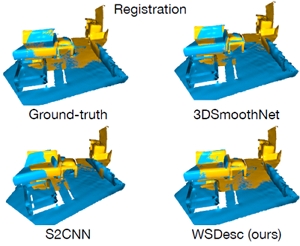

Lei Li*, Siyu Zhu, Hongbo Fu, Ping Tan, and Chiew-Lan Tai. End-to-End Learning Local Multi-view Descriptors for 3D Point Clouds. CVPR 2020. (Acceptance rate: 22.4%). June 2020. |


Abstract: In this work, we propose an end-to-end framework to learn local multi-view descriptors for 3D point clouds. To adopt a similar multi-view representation, existing studies use hand-crafted viewpoints for rendering in a preprocessing stage, which is detached from the subsequent descriptor learning stage. In our framework, we integrate the multi-view rendering into neural networks by using a differentiable renderer, which allows the viewpoints to be optimizable parameters for capturing more informative local context of interest points. To obtain discriminative descriptors, we also design a soft-view pooling module to attentively fuse convolutional features across views. Extensive experiments on existing 3D registration benchmarks show that our method outperforms existing local descriptors both quantitatively and qualitatively. |

Xuyang Bai*, Zixin Luo, Lei Zhou, Hongbo Fu, Long Quan, and Chiew-Lan Tai. D3Feat: Joint Learning of Dense Detection and Description of 3D Local Features. CVPR 2020. (Acceptance rate: 22.4%). June 2020. Oral Presentation |


Abstract: A successful point cloud registration often relies on robust establishment of sparse matches through discriminative 3D local features. Despite the fast evolution of learning-based 3D feature descriptors, little attention has been drawn to the learning of 3D feature detectors, even less to a joint learning of the two tasks. In this paper, we leverage a 3D fully convolutional network for 3D point clouds, and propose a novel and practical learning mechanism that densely predicts both a detection score and a description feature for each 3D point. In particular, we propose a keypoint selection strategy that overcomes the inherent density variations of 3D point clouds, and further propose a self-supervised detector loss guided by the on-the-fly feature matching results during training. Finally, our method achieves state-of-the-art results in both indoor and outdoor scenarios, evaluated on 3DMatch and KITTI datasets, and shows its strong generalization ability on the ETH dataset. Towards practical use, we show that by adopting a reliable feature detector, sampling a smaller number of features is sufficient to achieve accurate and fast point cloud alignment. |

Pui Chung Wong*, Kening Zhu, Xing-Dong Yang, and Hongbo Fu. Exploring eyes-free bezel-initiated swipe on round smartwatches. CHI 2020. (Acceptance rate: 24.31%). April 2020. |


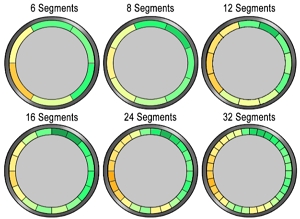

Abstract: Bezel-based gestures expand the interaction space of touch-screen devices (e.g., smartphones and smartwatches). Existing works have mainly focused on bezel-initiated swipe (BIS) on square screens. To investigate the usability of BIS on round smartwatches, we design six different circular bezel layouts, by dividing the bezel into 6, 8, 12, 16, 24, and 32 segments. We evaluate the user performance of BIS on these six layouts in an eyes-free situation, since it can potentially benefit various usage scenarios, e.g., navigation with smartglasses. The results show that the performance of BIS is highly orientation dependent, and varies significantly among users. Using the Support-Vector-Machine (SVM) model significantly increases the accuracy on 6-, 8-, 12-, and 16-segment layouts. We then compare the performance of personal and general SVM models, and find that personal models significantly improve the accuracy for 8-, 12-, 16-, and 24-segment layouts. Lastly, we discuss the potential smartwatch applications enabled by the BIS. |

Changqing Zou, Haoran Mo, Chengying Gao, Ruofei Du, and Hongbo Fu. Language-based Colorization of Scene Sketches. ACM Transactions on Graphics (TOG) special issue: Proceedings of ACM SIGGRAPH Asia 2019. 38(6). Article 233. November 2019. (Acceptance rate: 30.0%) |

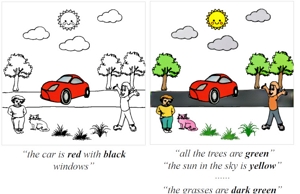
