ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2009)

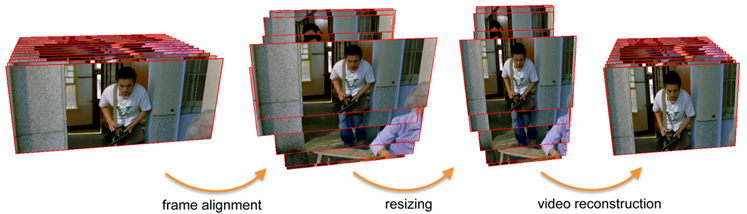

Overview of our automatic content-aware video resizing framework. We align the original frames of a video clip to a common coordinate system by estimating interframe camera motion, so that corresponding components have roughly the same spatial coordinates. We achieve spatially and temporally coherent resizing of the aligned frames by preserving the relative positions of corresponding components within a grid-based optimization framework. The final resized video is reconstructed by transforming every video frame back to the original coordinate system.
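The caption describes an alignment step that chains interframe camera motion into one transform per frame. That transform maps each frame into a common coordinate system, and its inverse maps the resized result back. The sketch below is a minimal, hypothetical illustration of that bookkeeping. It assumes each interframe motion is a 3×3 planar homography that maps pixel coordinates of frame t into frame t−1, and it uses frame 0 as the common coordinate system. The motion model and the function names (`cumulative_transforms`, `apply`, `inverse`) are assumptions for illustration and are not taken from the paper. The grid-based resizing optimization itself is not shown.

```python
from typing import List, Tuple

# Hypothetical helpers: 3x3 matrices are stored as nested lists of floats.
Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Return the 3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def inverse(m: Matrix) -> Matrix:
    """Invert a 3x3 matrix via its adjugate (raises if singular)."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[adj[r][col] / det for col in range(3)] for r in range(3)]


def apply(m: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Map a point through a homography, including the perspective divide."""
    u = m[0][0] * x + m[0][1] * y + m[0][2]
    v = m[1][0] * x + m[1][1] * y + m[1][2]
    w = m[2][0] * x + m[2][1] * y + m[2][2]
    return u / w, v / w


def cumulative_transforms(interframe: List[Matrix]) -> List[Matrix]:
    """Chain interframe motions into per-frame transforms to frame 0.

    Assumption: interframe[t - 1] maps frame t into frame t - 1, so
    T_t = T_{t-1} @ M_t maps frame t into frame 0 (T_0 is the identity).
    """
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    transforms = [identity]
    for m in interframe:
        transforms.append(matmul(transforms[-1], m))
    return transforms
```

For example, if the camera pans so that a static object drifts 5 pixels left per frame, every interframe motion is a translation by +5 in x. The object at x = 90 in frame 2 then aligns to x = 100 in frame 0, and `apply(inverse(T[2]), ...)` carries a point from the aligned (resized) coordinates back to frame 2.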


author = {Yu-Shuen Wang and Hongbo Fu and Olga Sorkine and Tong-Yee Lee and Hans-Peter Seidel},

title = {Motion-Aware Temporal Coherence for Video Resizing},

journal = {ACM Trans. Graph. (Proceedings of ACM SIGGRAPH ASIA)},

year = {2009},

volume = {28},

number = {5},

}

We would like to thank the anonymous reviewers for their constructive comments. We thank Hui-Chih Lin for the video production and Andrew Nealen for the video narration. We also thank Miki Rubinstein for providing the seam carving implementation used in our comparisons and Joyce Meng for her help with the video materials and licensing. The video clips are used with permission from ARS Film Production, the Blender Foundation, and MAMMOTH HD. Yu-Shuen Wang and Tong-Yee Lee are supported by the Landmark Program of the NCKU Top University Project (contract B0008) and the National Science Council, Taiwan (contracts NSC-97-2628-E-006-125-MY3 and NSC-96-2628-E-006-200-MY3). Hongbo Fu is partly supported by a start-up research grant at CityU (Project No. 7200148). Olga Sorkine's research is supported in part by an NYU URCF grant and NSF award IIS-0905502.